Digital Twins of the Brain

Disrupting neuroscience research with artificial models of the brain

Francisco Acosta, Fatih Dinc, Bariscan Kurtkaya, Abby Bertics, Nina Miolane

“Neuroscience experiments are crucial to advance our understanding of the brain, but they are time-consuming, costly, and limited in scope. We propose an alternative approach: building digital twins of brain circuits using artificial neural networks, allowing us to test scientific hypotheses in silico before validating them in vivo. Crucially, our digital twins have already reproduced key findings from real, biological experiments and uncovered new hypotheses on memory and navigation, which our collaborators are confirming in living systems. Our results demonstrate that digital twins can accelerate discovery, reduce experimental cost, and provide a powerful new paradigm for probing the organizing principles of the brain.”

Updates from the Brain Twin Team

July 2025: Our workshop NeurReps is accepted at NeurIPS 2025!

June 2025: Release of short-term memory digital twin

We have released the neural datasets generated by our short-term memory digital twins.

May 2025: Our digital twin of short-term memory is accepted at ICML 2025!

December 2024: Our digital twins of spatial navigation are at NeurIPS 2024!

Two papers from our team are accepted to NeurIPS 2024.

About Memory

Remembering a phone number just long enough to dial it is a classic example of short-term memory. Yet the neural mechanisms that allow brains to transiently store information remain poorly understood. We address this gap by building digital twins of memory circuits using recurrent neural networks. In these twins, large-scale simulations reveal candidate mechanisms, which we then distill into analytical laws that formalize when and how short-term memory can emerge. These laws are validated back in simulation and then tested in biological experiments, closing the loop between theory, modeling, and data. This digital twin approach provides a principled framework for uncovering the computational rules that let brains—and their models—hold information briefly in mind.
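As a toy illustration of what "transiently storing information" means in a recurrent network, the sketch below follows a single linear recurrent unit through a delay period. It is not one of the trained RNN digital twins described above: the function name `run_delay`, the hand-set `recurrent_weight`, and the cue and delay values are all assumptions chosen for illustration. With a weight of exactly 1 the unit holds the cue indefinitely, while a weight below 1 lets the memory fade.

```python
import numpy as np

def run_delay(recurrent_weight, cue=0.8, delay_steps=50):
    """Present a cue for one step, then let the unit evolve with no input.

    Hypothetical toy model: h[t+1] = recurrent_weight * h[t] + x[t].
    """
    inputs = np.zeros(delay_steps + 1)
    inputs[0] = cue  # the cue is shown only at the first time step
    h = 0.0
    trace = []
    for x in inputs:
        h = recurrent_weight * h + x
        trace.append(h)
    return np.array(trace)

perfect = run_delay(1.0)  # weight of 1: activity persists through the delay
leaky = run_delay(0.9)    # weight below 1: activity decays geometrically

print("end of delay, weight 1.0:", perfect[-1])
print("end of delay, weight 0.9:", leaky[-1])
```

Trained networks are nonlinear and high-dimensional, so their memory mechanisms are far richer than this one-unit case; the sketch only shows why the recurrent dynamics determine how long a stimulus can be held.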

About Navigation

At the heart of intelligence is the ability to locate ourselves—whether in a physical environment or in mental space. While grid and place cells were first identified as critical to navigation, they represent only a fraction of a distributed system spread across many brain regions. Using our new dynamic latent-variable models (Dinc et al., 2025), we build digital twins of navigation circuits that reveal how populations of neurons jointly encode spatial and abstract maps. These models not only reproduce experimental findings but also generate testable predictions, which we validate through large-scale simulations and rodent data, advancing a unified framework for how brains—and their digital twins—construct coherent representations of the world.

Publications

Understanding and Controlling the Geometry of Memory Organization in RNNs | 2025

Haputhanthri, U., Storan, L., Jiang, Y., Raheja, T., Shai, A., Akengin, O., Miolane, N., Schnitzer, M. J., Dinc, F., & Tanaka, H.

We develop recurrent neural networks (RNNs) as digital twins of neural circuits supporting short-term memory in biological brains. We show that abrupt learning on memory tasks is preceded by geometric restructuring in the digital twin’s phase space. By introducing a temporal consistency regularization, we promote biologically plausible attractor formation, providing a pathway to more efficient and interpretable dynamical mechanisms of short-term memory.

Dynamical Phases of Short-Term Memory Mechanisms in RNNs | ICML 2025

Kurtkaya, B., Dinc, F., Yuksekgonul, M., Blanco-Pozo, M., Cirakman, E., Schnitzer, M., Yemez, Y., Tanaka, H., Yuan, P., & Miolane, N.

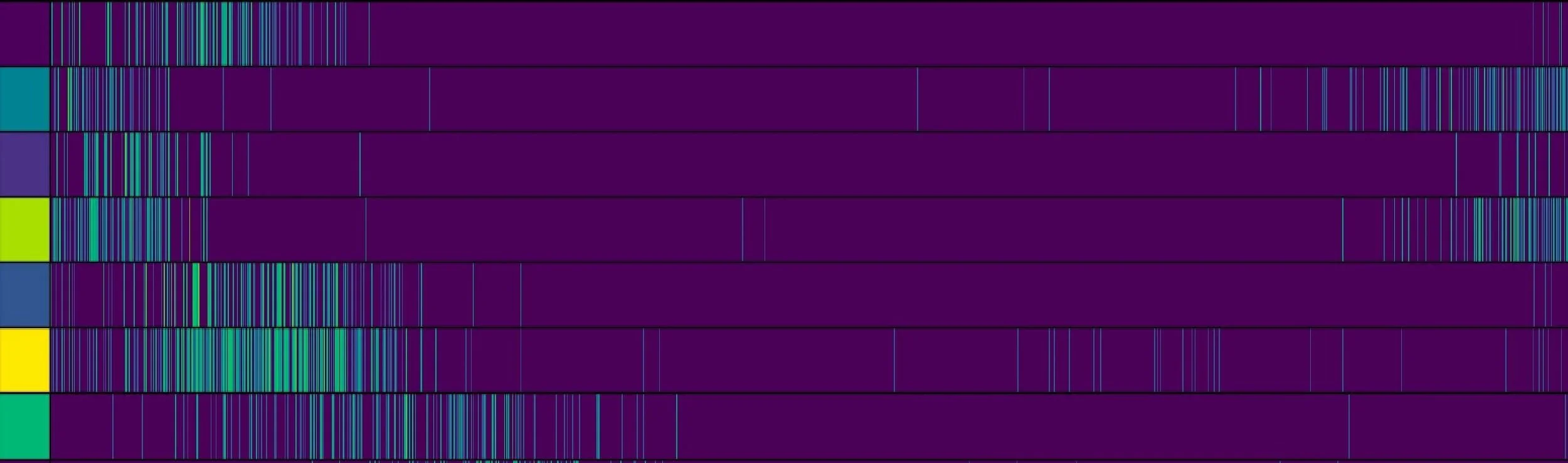

Using RNNs as digital twins of brain circuits supporting short-term memory, we uncover two distinct dynamical regimes—slow-point manifolds and limit cycles—that sustain memory capabilities. We derive scaling laws governing their emergence and validate these across 35,000 artificial neural networks, yielding both theoretical predictions and testable hypotheses for real, biological memory circuits.

Not So Griddy: Internal Representations of RNNs Path Integrating More Than One Agent | NeurIPS 2024

Redman, W., Acosta, F., Acosta-Mendoza, S., & Miolane, N.

We construct RNNs as digital twins of the brain circuits supporting spatial navigation. When these networks are trained to track the positions not only of themselves, but of multiple agents at once, they develop new coding strategies: weaker grid-like responses, enhanced border coding, and relative position tuning. This shows that digital twins of navigation adapt their internal maps depending on the demands of multi-agent movement, providing real, testable hypotheses for how our brains operate.

Global Distortions from Local Rewards: Neural Coding Strategies in Path-Integrating Neural Systems | NeurIPS 2024

Acosta, F., Dinc, F., Redman, W., Madhav, M., Klindt, D., & Miolane, N.

RNNs trained for path integration act as digital twins of the brain’s navigation system. The artificial neural networks reproduce how grid cells globally distort their firing fields in response to local reward cues. This integration of spatial and reward information in grid-like codes establishes a computational framework for how digital twins can bridge navigation and value-based decision-making in the brain.
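For readers unfamiliar with path integration, the task named in the two navigation papers above, the sketch below shows the computation itself: recovering position by accumulating velocity over time. It is a minimal illustration under assumed settings, not the training setup used in those papers. The function names `simulate_trajectory` and `path_integrate`, the Gaussian random-walk velocities, and the step size `dt` are all hypothetical choices.

```python
import numpy as np

def simulate_trajectory(n_steps=200, dt=0.1, seed=0):
    """Generate random 2D velocities and the positions they produce."""
    rng = np.random.default_rng(seed)
    velocities = rng.normal(0.0, 1.0, size=(n_steps, 2))
    positions = np.cumsum(velocities * dt, axis=0)  # ground-truth path from the origin
    return velocities, positions

def path_integrate(velocities, dt=0.1, start=(0.0, 0.0)):
    """Estimate position step by step from velocity alone."""
    position = np.array(start, dtype=float)
    estimates = []
    for v in velocities:
        position = position + v * dt  # update the running position estimate
        estimates.append(position.copy())
    return np.array(estimates)

velocities, positions = simulate_trajectory()
estimates = path_integrate(velocities)
print("max error:", np.abs(estimates - positions).max())
```

A network trained on this task receives the velocity sequence as input and must output the position, so it has to learn an internal representation that supports this accumulation.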

Contact

For any questions, please contact ninamiolane@ucsb.edu